Engagement with partisan or unreliable news is driven by personal content choices, rather than the content presented by online search algorithms, suggests a study published in Nature, "Users choose to engage with more partisan news than they are exposed to on Google search," co-authored by SC&I Associate Professor of Communication Katherine Ognyanova. Researchers found that the Google Search algorithm does not disproportionately display results that mirror the user’s own partisan beliefs, and that individuals instead seek out such sources proactively.

It has been suggested that search engine algorithms may promote the consumption of like-minded content through politically biased search rankings. Such suggestions raise concerns about the cultivation of online ‘echo chambers’ that limit the user’s exposure to contrary opinions and exacerbate existing biases.

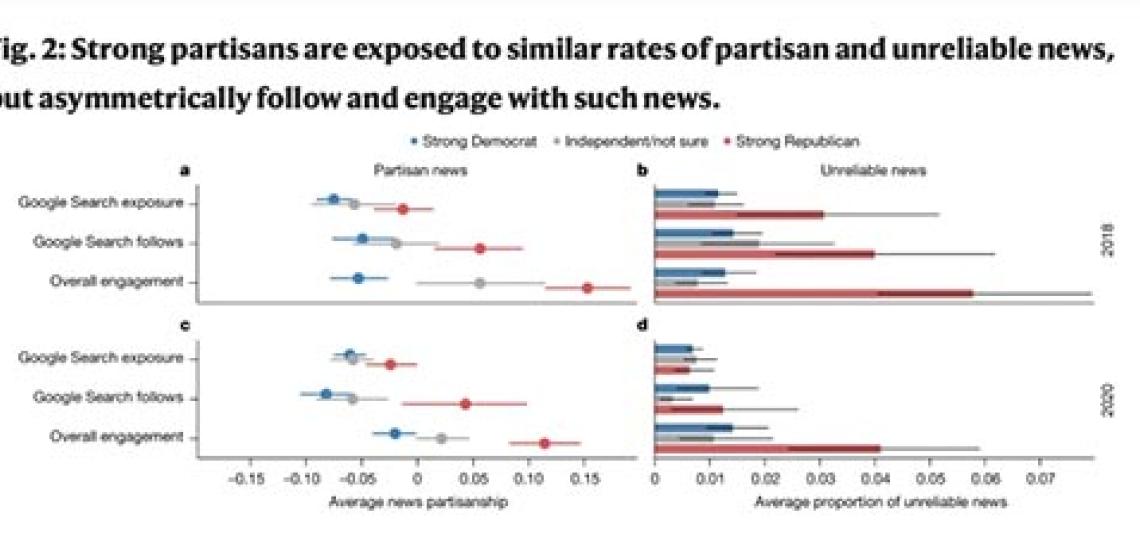

To investigate whether search results influence what people choose to read, Ognyanova and colleagues conducted a two-wave study analyzing desktop browsing data during two US election cycles, with 262–333 participants in 2018 (with an over-sampling of ‘strong partisans’ in this period), and 459–688 in 2020. Participants were asked to install a custom-built browser extension that recorded three sets of data: the URLs presented to users on Google Search results pages, interactions with the URLs on those pages, and wider online URL interactions beyond the Google Search results in these two time periods. The resulting dataset includes over 328,000 pages of Google Search results and almost 46 million URLs from wider internet browsing. The partisanship of sources was scored on the basis of wider sharing patterns among political bases and compared to the self-reported political leanings of participants.

For both study waves, participants were found to engage with more partisan content overall than they were exposed to in their Google Search results. This suggests that the Google Search algorithm was not leading users to content that affirms their existing beliefs. Instead, the authors suggest that the formation of these ‘echo chambers’ could be driven by user choice rather than algorithmic intervention. Unreliable news was also less prevalent among the URLs shown in Google Search results than among the results participants followed or the URLs they engaged with more widely, with the largest discrepancy among strongly right-wing users.
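To make the comparison concrete, the sketch below shows one simplified way to contrast the three sets of URLs the extension recorded: exposure (URLs shown in search results), follows (results a participant clicked), and engagement (wider browsing). It is an illustration only, not the authors' method. The domain names, the score values, and the `mean_partisanship` helper are all hypothetical, and the study's actual scoring and statistical analysis are more involved.

```python
from statistics import mean

# Hypothetical partisanship scores per domain, from -1 (left) to +1 (right).
# These values are invented for illustration and do not come from the study.
DOMAIN_SCORES = {
    "left-outlet.example": -0.8,
    "center-outlet.example": 0.0,
    "right-outlet.example": 0.7,
}


def mean_partisanship(domains, scores=DOMAIN_SCORES):
    """Return the mean absolute partisanship of the scored domains.

    Domains without a score are skipped; returns None if none are scored.
    """
    scored = [abs(scores[d]) for d in domains if d in scores]
    return mean(scored) if scored else None


# Toy browsing record for a single hypothetical participant.
records = {
    "exposure": [
        "center-outlet.example",
        "center-outlet.example",
        "left-outlet.example",
        "right-outlet.example",
    ],
    "follows": ["center-outlet.example", "left-outlet.example"],
    "engagement": [
        "left-outlet.example",
        "left-outlet.example",
        "center-outlet.example",
    ],
}

# Average partisanship for each of the three data sets.
summary = {name: mean_partisanship(urls) for name, urls in records.items()}

for name, value in summary.items():
    print(f"{name}: {value:.3f}")
```

In this toy record the engagement average is higher than the exposure average, which is the direction of the pattern the study reports. The numbers themselves carry no empirical weight.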

Learn more about the Communication Department at the Rutgers School of Communication and Information on the website.

Read more in Rutgers Today: Are Search Engines Bursting the Filter Bubble?

Image: Courtesy of Katherine Ognyanova

In the Media

News Akmi: People, Not Google’s Algorithm, Create Their Own Partisan ‘Bubbles’

World Magazine: Così il Google Feed risponde alle nostre scelte e ricerche (This Is How the Google Feed Responds to Our Choices and Searches)