“What is the meaning of journalism and its function?” Professor of Journalism and Media Studies John V. Pavlik asked ChatGPT. The chatbot answered him immediately. Its response, Pavlik said, “was three paragraphs that were well written, and made sense, and I basically agreed with it. I thought, even I would have a hard time writing three more coherent paragraphs.”

Intrigued, Pavlik next decided to ask ChatGPT a series of questions designed to draw out more of its knowledge of the history of journalism. He wanted to see the kinds of answers a student might receive from ChatGPT. “Right now, a big fear among educators is that students will plagiarize ChatGPT,” Pavlik said, “so I wanted to pull some research together to help reveal both the positive and the negative aspects of this new chatbot.”

His analysis, “Collaborating With ChatGPT: Considering the Implications of Generative Artificial Intelligence for Journalism and Media Education,” was published in Journalism and Mass Communication Educator on January 7, 2023.

ChatGPT, which OpenAI launched on November 30, 2022 (its development is partly funded by Microsoft), is a generative AI natural language tool that answers in “complete sentences that seem authoritative and are presented as common knowledge” without referencing its sources, Pavlik said. It may soon begin revealing its sources, especially since a competing tool, Perplexity.ai, already does so.

As he anticipated, Pavlik’s research identified ways that ChatGPT could enhance journalism and media education, as well as a longer list of ways it might pose problems and even dangers.

One positive, Pavlik found, is that ChatGPT could be useful as a reference or search tool for background information or ideas, in much the same way students and journalists already use Google search for that purpose.

Comparing ChatGPT to other tools used in education and research, such as Microsoft Excel, Pavlik said, “We use spreadsheets to help us with numbers, so we don’t have to do the math ourselves all the time, and there is nothing wrong with letting the computer do the adding and subtracting.”

Pavlik’s list of potential concerns is much longer, however. At the top of the list are issues concerning plagiarism, ethics, bias, and intellectual integrity.

The possibility of students using ChatGPT to plagiarize is a serious problem, Pavlik said. “The danger is that students, rather than write content themselves, will let ChatGPT write it and then change a few words around so they think they did not literally plagiarize from ChatGPT. That’s where I see the real potential damage.”

Ensuring the intellectual integrity of information sourced from ChatGPT is also critical, Pavlik said. “Whether the lack of integrity is in the form of plagiarism, or the creation of things that are synthetic but are presented as real, it will be essential for the user to ensure the integrity of their work isn’t somehow compromised by ChatGPT.

“ChatGPT users need to thoroughly research any factual assertion and information that ChatGPT makes or provides, double-checking it all for accuracy, completeness, and bias, because there is no way to know without conducting additional fact-checking research whether its response is correct, or mostly right, or really far off.”

Beyond the classroom, Pavlik said he anticipates that unscrupulous actors, whether affiliated with governments, political figures, or corporations, will use generative AI tools such as ChatGPT to create content that manipulates public understanding and opinion.

These mis- and disinformation campaigns could be subtle but also rampant, he said, because ChatGPT is so fast. An operation that once might have required dozens of workers to create fake content, Pavlik said, could now need only one computer and ChatGPT.

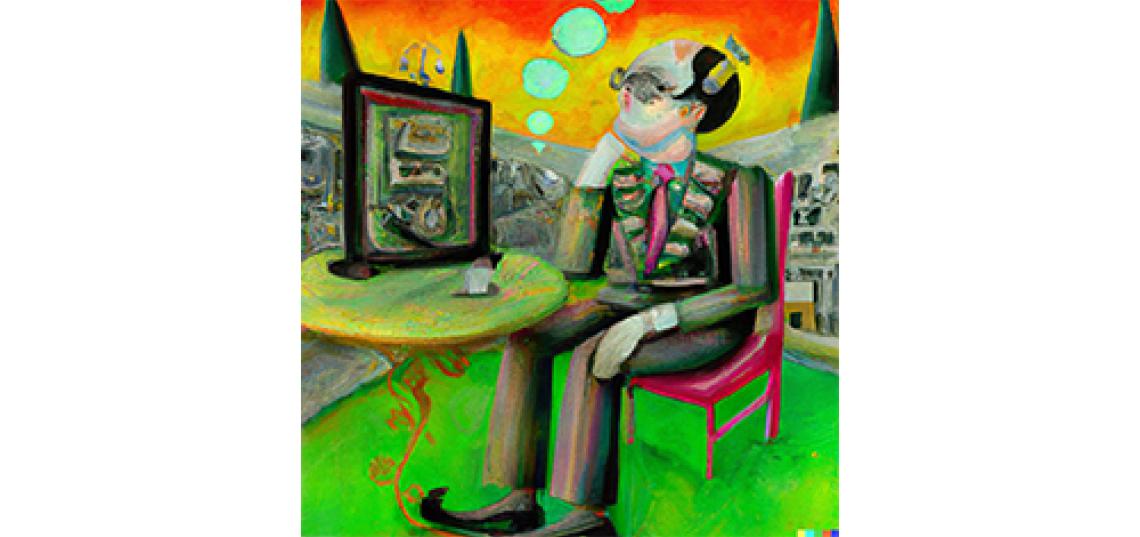

Pavlik said he was inspired to ask ChatGPT the initial question, and then to undertake the study, while conducting research on potential bias in the images produced by DALL-E, another generative AI tool. “The news media are already using DALL-E to create content,” Pavlik said. “For example, Cosmopolitan created its June 2022 cover using DALL-E.”

Pavlik noted there are significant differences between ChatGPT and DALL-E. “Every DALL-E image has a watermark embedded in it that indicates it came from DALL-E. Also, one of the stipulations of using DALL-E is users must indicate that they created the image with DALL-E. But with ChatGPT, at least currently, there isn’t a mechanism that builds a marker into the text that can’t be removed. OpenAI might be working on that, but even a marker wouldn’t solve the plagiarism, bias, and ethics problems because students could just retype ChatGPT’s content.”

Pavlik said he plans to share his analysis in his SC&I journalism and media studies courses, including Digital Media Ethics, Digital Media Lab, and Digital Media Innovation.

Addressing ChatGPT’s inability (or refusal) to provide sources for its information and opinions, Pavlik said, “In terms of just quoting ChatGPT as a source, my perception right now is that generative AI is going to continue to evolve, but at the moment, most people might be embarrassed to say they relied on ChatGPT. It’s like saying your source is your Amazon Echo.”

Discover more about the Journalism and Media Studies major and department on the Rutgers School of Communication and Information website.

Image: Created by SC&I Professor John V. Pavlik using DALL-E. The text prompt he used to generate the image was: "Surrealistic painting of a Rutgers professor contemplating the impact of artificial intelligence on journalism."