Rutgers-affiliated researchers have built a new tool, Collaborative Human-AI Risk Annotation (CHAIRA), that lets people work with an AI chatbot to label online comments as civil or uncivil. CHAIRA can help social media platforms, educators, and technology designers build systems that promote healthier digital spaces.

The study, “Collaborative Human-AI Risk Annotation: Co-Annotating Online Incivility with CHAIRA,” was presented at iConference 2025, organized by the iSchools, where the paper was named a finalist for a Best Full Paper Award. It was presented by alumna Jinkyung Katie Park Ph.D. ’22, an Assistant Professor at Clemson University’s School of Computing, and Vivek Singh, Associate Professor of Library and Information Science.

“Online incivility is everywhere—from comment sections to social media—and it affects the way we talk to each other and how safe or welcome people feel online,” Park said. “But finding and dealing with these harmful comments at scale is hard. Our study shows that with the right tools, AI can be a helpful teammate—not a replacement—for people working to make the internet a more respectful place.”

They conducted the study, Park said, “to explore how humans and AI can work together to better identify harmful or disrespectful comments online—what we call ‘online incivility.’ This type of content can be subtle and subjective, which makes it hard to detect consistently, even for trained people. Therefore, we asked: Can AI help humans spot incivility more efficiently and accurately, and how can we best design that collaboration?”

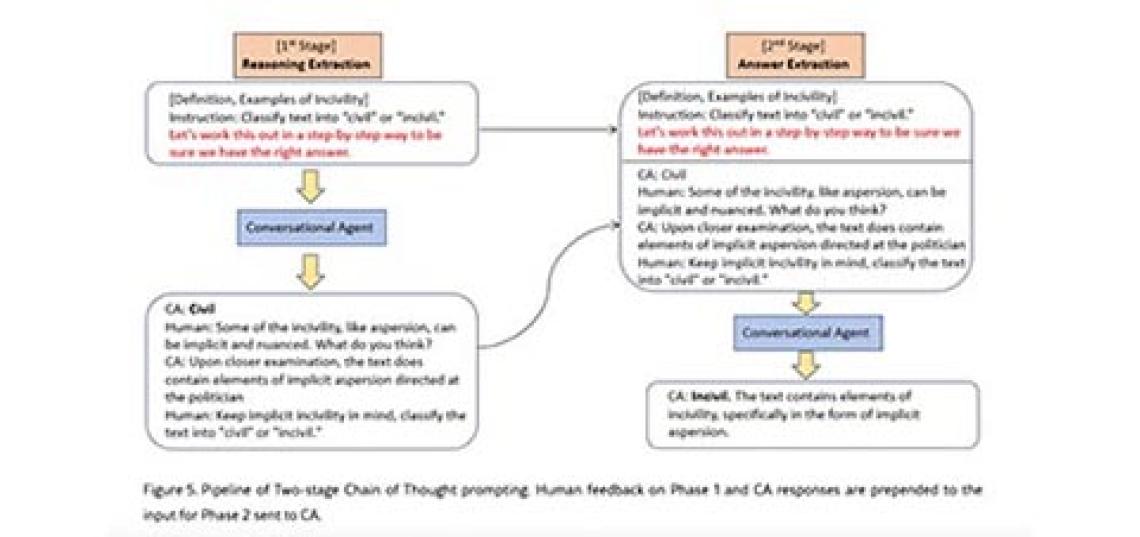

Park and Singh used large language models (LLMs) to support the interaction between human and AI annotators, and they compared four different prompting strategies. They then evaluated CHAIRA on 457 user comments with ground-truth labels, measuring inter-rater agreement between the human and AI coders.

They found that when humans and AI collaborate, especially through back-and-forth conversation, they can identify uncivil comments effectively.

“Our tool, CHAIRA, lets people talk with an AI agent and refine its suggestions,” Park said. “While the AI sometimes missed hidden or subtle forms of disrespect, it also caught politically charged or complex incivility that people missed. When humans and AI worked together, their accuracy was almost as good as two trained humans working together for an extensive period of time.”

Study co-authors include Rahul Dev Ellezhuthil, a computer science graduate student at Rutgers, and Pamela Wisniewski, Director of the Socio-Technical Interaction Research Lab.

Learn more about the Ph.D. Program and the Library and Information Science Department at the Rutgers School of Communication and Information on the website.

Image: Courtesy of Park and Singh

Read more about Park: Alumna Jinkyung Katie Park Ph.D. ’22 Named Runner Up of the 2024 iSchools Doctoral Dissertation Award.