A new research proposal designed to understand end users’ expectations of and interactions with social media flagging tools has received funding from the National Science Foundation. The project, “Small: Incorporating Procedural Fairness in Flagging Mechanisms on Social Media Sites,” will be led by Assistant Professor of Library and Information Science Shagun Jhaver.

“Online platforms offer flagging, a technical feature that empowers users to report inappropriate posts or bad actors,” Jhaver said. “Through this project I will especially focus on end users who are individuals from marginalized communities, including BIPOC users, LGBTQ+ users, and women, who face disproportionate online harms and, therefore, would benefit from advancements in flagging tools to trigger sanctions against harassment and hate speech.”

Because end users contribute to the flagging data that drives moderation systems, his team will focus on their information and communication needs and propose design and policy solutions that offer users greater voice, agency, and consistency.

“By incorporating these principles,” Jhaver said, “this work will help build more equitable and trustworthy content moderation systems where all users feel safe, heard, and respected.”

Prior research, Jhaver said, has shown that racial, sexual, and gender minorities are more vulnerable to online harm. “Improvements in flagging mechanisms informed by our research will empower these groups to take steps that will help them protect themselves confidently and retain sufficient visibility into the process. This greater sense of control and security will promote their participation in critical, even controversial, online arguments and contribute to digital equality.”

Through the project, Jhaver said, he and his team “will provide methodological guidance for enhancing users’ experiences with flagging, a focus currently lacking in substantial empirical literature. We will contribute open-source, interactive prototypes incorporating a principles-based approach to flagging design, and examine challenging moral questions about the labor involved in flagging and the responsibilities of different stakeholders to address online harm.”

Flags play a critical role in keeping content moderation systems feasible because they are a first step toward identifying content that requires careful review by moderators or automated tools, Jhaver said. “Flagging is one of the few recourses online platforms offer users who experience online harassment or are targeted by hate speech.”

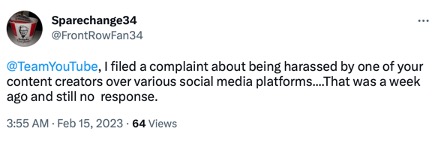

However, Jhaver said, current flagging systems have flaws that he and his team will seek to address. “They are currently designed to ask users to elaborate their concerns in ways that align with their content moderation flows. Thus, users end up shoehorning their individualized concerns into a predetermined reporting structure that often falls short of letting victims express their concerns fully and authentically. In addition, once a post is flagged, victims receive little to no information about the flag processing steps or the flag review decisions. Many users also perceive flag reviews as biased against user groups based on their identity characteristics and political affiliations.”

Figure: An example of how content moderation systems frequently fail to provide users with sufficient feedback on the processing of their reports.

Developing new flagging prototypes, which he and his team will co-design with marginalized communities, is important, Jhaver said, because “new social media platforms often design their moderation systems ad hoc and from scratch, repeating mistakes that previous platforms encountered and addressed. Our systematic guidance on designing flagging procedures, algorithms, and taxonomies will help new platforms set up flagging mechanisms more efficiently, and guide established platforms in serving a greater variety of flagging-related communication needs.”

In addition, Jhaver said, their work will influence the drafting of legislation that obliges service providers to offer digital tools for reporting illicit content (e.g., the EU Digital Services Act and the UK Online Safety Bill) by clarifying the accessibility and usability requirements of reporting interfaces.

To involve Rutgers and SC&I students in the research, Jhaver will create opportunities for undergraduate and graduate students to collaborate with him and his team. He will recruit student researchers from historically underrepresented groups in computing, including women and Black communities. “This goal is feasible because of Rutgers University’s campus diversity: it was ranked third in ethnic diversity among AAU public institutions. At Rutgers SC&I, 40% of Ph.D. students identify as being of non-white ethnic background, and over 75% as female. These students’ self-reflexivity about marginalization and online harm experiences would crucially inform this research through protocol revisions and a more informed understanding of our interview and workshop participants,” he said.

To recruit undergraduates, he will work with the Rutgers Future Scholars program, which includes minoritized, disadvantaged, and first-generation college populations, as well as the Rutgers Aresty Program.

Graduate students, Jhaver said, will be drawn to the project’s relevance to diverse social and technical challenges. He will also incorporate the project’s outputs into his existing Master of Information course, “Understanding, Designing, and Building Social Media,” and is planning to offer a new graduate course focused on content moderation on social media.

“These offerings will disseminate the study’s findings and help improve students’ knowledge of the relevant issues in this space and how to address them,” Jhaver said.


Image: Courtesy of Shagun Jhaver